-

This is 100% true! Data you create probably isn't that valuable on an individual basis. Economies of scale, etc. But increasingly I think this isn't the only way personalization systems make you labor so they can extract value. There's... negative data. Ryanbarwick/1600862820693725185

-

I'm not the first person to note that Amazon and Google have become incredibly ad-heavy: you land on the page and find the thing you searched for buried under ads. I'm sure you've noticed this there and on other platforms and wondered: why does this look like sh*t?

-

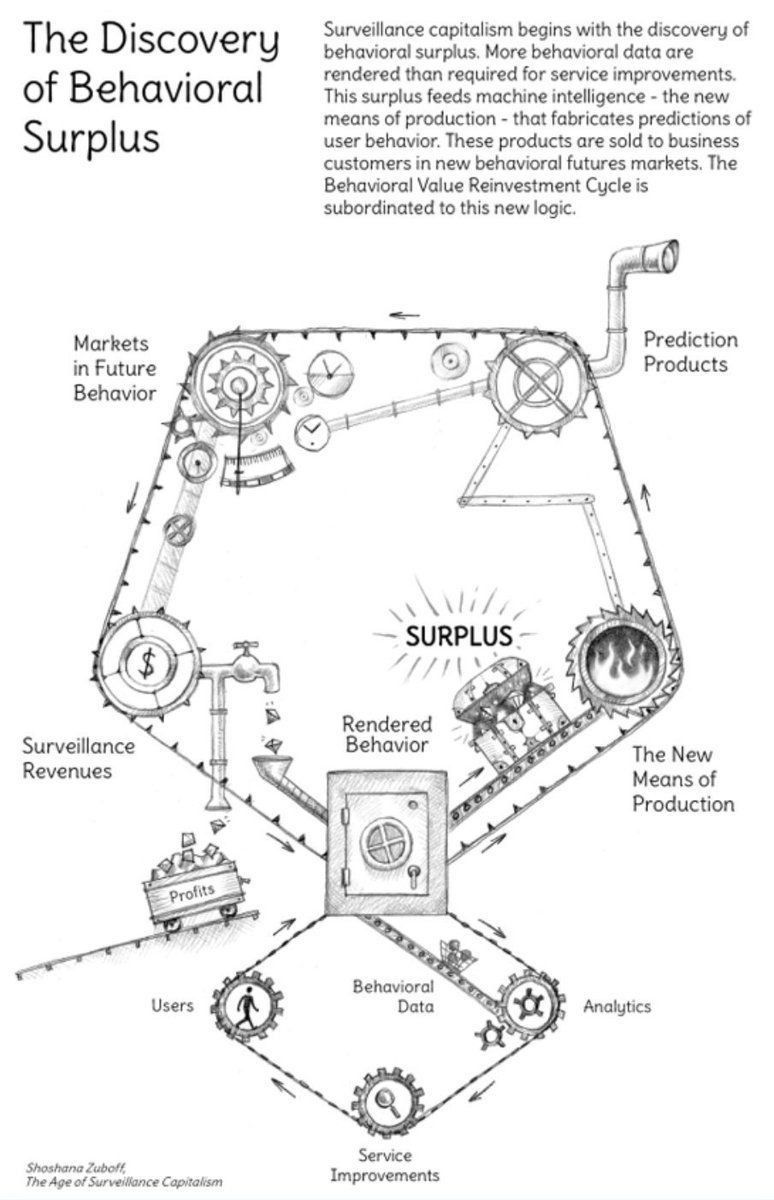

Now, I cannot recommend The Age of Surveillance Capitalism, but it does offer a very useful concept: "behavioral surplus." The idea is that in the early era of platforms like Google, they would design things, test them, watch how users reacted, and improve the platform...

-

When platforms became interested in rendering behavior into currency in order to sell ads, they inevitably ran into a supply problem: they wanted to grow infinitely, but users would only do so much on the platform, and even vast capture through expansion had limits, so...

-

They realized the process by which they improved their sites based on user behavior could be reversed. Instead, they could use predictions about ads to force you to labor against the design of the site in order to render more accurate user behavioral data...

-

If you click on one of the many ads above the fold, well, that's a victory: an ad payout and preference reassurance. But while the behavioral marketplace promises to predict the Future of You(r behavior), it is way easier to *create* the future than to predict it...

-

So design optimization came to serve the ads, not the users, and more and more ads were placed between you and the meat of the service you wanted to access, in an attempt to force you to comply with the targeting technology's predictions of your interests.

-

The result is that they can present an increasingly precisely targeted set of ads, forcing you to either reveal a precise preference of high value, or...

-

You end up being forced to do the labor (because it absolutely is work, done to earn them money) of negotiating through heavy ads to click on the right result, which renders even higher-value preference data that can then be used to make targeting more precise.

-

This work creates what is arguably a single data point, low-value on paper, but it is surrounded by your negative data: the labor you did to negotiate through the many other predictions that the targeting system wanted you to either state a preference for or avoid...

-

and this precision creates *immense* pressure on the ad tech ecosystem to increase precision and drives up the overall pricing the platform can ask for its ads, with huge value for the platform... Chronotope/1524869229748576267

-

But that huge value for the platform doesn't come out of nothing. It is created from your *labor* on the *platform*. When you negotiate the ads on Amazon to buy the thing you really want, you are increasing their accuracy in ways that pay out significantly, even at the scale of one person.

-

And that value for the platform is created out of your labor. And we *do* have models to understand how labor should be compensated. And how we should negotiate about it...

-

Because the problem of platforms isn't that they should pay for your data. It's that they should be forced to negotiate for your labor and the conditions under which it happens. Because the data isn't just the data, it's the data that is created by you working *their* machines...

-

This is the fundamental problem with every "data union" or "data coop." They ape the concept of unions, but they aren't unions. Unions negotiate the conditions of labor; they don't sell its product. The point is to have a voice in the conditions of your labor...

-

As we see today(!), the point of unions is to build solidarity for action, equity, co-ownership, and mutual aid. None of the entities that present themselves as "data unions" are interested in that, so of course they are useless. They're just interested in becoming another middleman.

-

If they wanted more than to become rent collectors on big tech, they would be arguing for data deletion, checkout, and interop: real things that could change the conditions of the labor we feed into platforms.

-

Software is never perfect. Just as ML trains on millions of inputs, so does all software. It turns out that user data is the labor that powers the information economy, and that economy regularly oscillates between asking for more traceable interactions and inception of choice.

-

A real data union wouldn't just ask for compensation; it would ask for total data flow transparency. It would require algorithmic transparency, interoperability, deactivation, and choice. It would be asking for equity in the firms that *you* are building with *your* labor.

-

Negative data is all the work you must do in order to deliver that seemingly low-value data point to the platform. But that labor has value. Your time has value. Your work has value. You deserve a share of the profits. Instead, you get ever-worsening labor conditions.

-

Data unions don't acknowledge that. They're how a capitalist thinks a union works: workers just want more of the precious money. That's not how unions work, and it isn't how the handling of your data works.

-

Once you realize that, you realize that these trade-offs of cash for your data are never in your favor.

-

No ethical clicks under capitalism.

-

(Another good convo on this here: Chronotope/1503378522701762562)

Chronotope’s Twitter Archive—№ 161,432